We use the `replace` method to strip the `./.` segment from each returned URL, replacing it with an empty string.

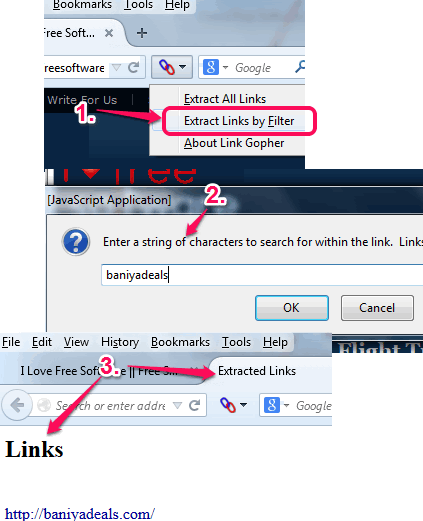

All the URLs of the books were located within heading tags. Hence we created the XPath expression `'//h3/a'` to avoid any non-book URLs: it only locates links inside `h3` headings. The formatting of the returned URLs is rather odd, as each one is preceded by a `./.` segment (each site is different, after all). The next line adds the base URL to the returned URL to complete it: `url = self.base_url + url.replace('./.', '')`.

Links can also be restricted with a rule such as `[Rule(LinkExtractor(allow = 'books_1/'), …`, whose link extractor only extracts URLs matching the `books_1/` pattern. From the command line, `lynx -listonly -dump` prints just the list of links on a page.

Tip: Click the 'Lock' button to keep the current list of links even if you browse to other pages. Example: Type 'mp3' in the 'Quick find' field to find all mp3 files on the page.
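The XPath-plus-`replace` step can be reproduced without Scrapy to see what it does. This is a minimal sketch using only the standard library's `html.parser`, not the spider's actual code: `H3LinkParser`, `book_urls`, and the optional `allow` regex (which only imitates the idea of `LinkExtractor(allow=...)`) are hypothetical names. One simplification to note: the parser accepts any `<a>` nested inside an `<h3>`, whereas `//h3/a` requires the link to be a direct child.

```python
from html.parser import HTMLParser
import re


class H3LinkParser(HTMLParser):
    """Collect href values of <a> tags found inside <h3> tags.

    Roughly what '//h3/a' selects, except that nested (non-direct-child)
    links inside an <h3> are also accepted.
    """

    def __init__(self):
        super().__init__()
        self.h3_depth = 0  # greater than 0 while we are inside an <h3>
        self.hrefs = []

    def handle_starttag(self, tag, attrs):
        if tag == "h3":
            self.h3_depth += 1
        elif tag == "a" and self.h3_depth > 0:
            href = dict(attrs).get("href")
            if href:
                self.hrefs.append(href)

    def handle_endtag(self, tag):
        if tag == "h3" and self.h3_depth > 0:
            self.h3_depth -= 1


def book_urls(html, base_url, allow=None):
    """Return completed book URLs from the links inside <h3> headings."""
    parser = H3LinkParser()
    parser.feed(html)
    parser.close()
    urls = []
    for url in parser.hrefs:
        # Same idea as: self.base_url + url.replace('./.', '')
        full = base_url + url.replace("./.", "")
        # Optional regex filter (hypothetical, modeled on LinkExtractor's allow)
        if allow is None or re.search(allow, full):
            urls.append(full)
    return urls
```

For example, `book_urls('<h3><a href="./.books_1/page.html">A</a></h3>', 'https://example.com/')` returns `['https://example.com/books_1/page.html']`. Inside a real Scrapy spider, `response.xpath('//h3/a/@href').getall()` does the selection, and `urllib.parse.urljoin` is a more forgiving alternative to plain string concatenation when the relative paths vary.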

# Extract all links from a web page

Lynx, a text-based browser, is perhaps the simplest command line tool for extracting links.

The links panel extracts all links in the current web page, so you can easily find images, music, video clips, zip files and other downloads, as well as links to other pages.
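Lynx can print a page's links as plain text with `lynx -listonly -dump <url>`, which makes its output easy to post-process. The sketch below assumes each link appears on its own numbered line (for example `   1. https://example.com/`) under a `References` header; `parse_lynx_listonly` and `lynx_links` are hypothetical helper names, and `lynx_links` only works if Lynx is installed and on the `PATH`.

```python
import re
import subprocess

# Assumed output format: numbered entries such as "   1. https://example.com/"
LINK_LINE = re.compile(r"^\s*\d+\.\s+(\S+)\s*$")


def parse_lynx_listonly(output):
    """Return the URLs listed in `lynx -listonly -dump` output, in order."""
    links = []
    for line in output.splitlines():
        match = LINK_LINE.match(line)
        if match:
            links.append(match.group(1))
    return links


def lynx_links(url):
    """Run Lynx on `url` and parse its link list (requires Lynx)."""
    result = subprocess.run(
        ["lynx", "-listonly", "-dump", url],
        capture_output=True,
        text=True,
        check=True,
    )
    return parse_lynx_listonly(result.stdout)
```

Filtering the result by file type then works like a "quick find": `[u for u in lynx_links(url) if "mp3" in u]` keeps only the mp3 links.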

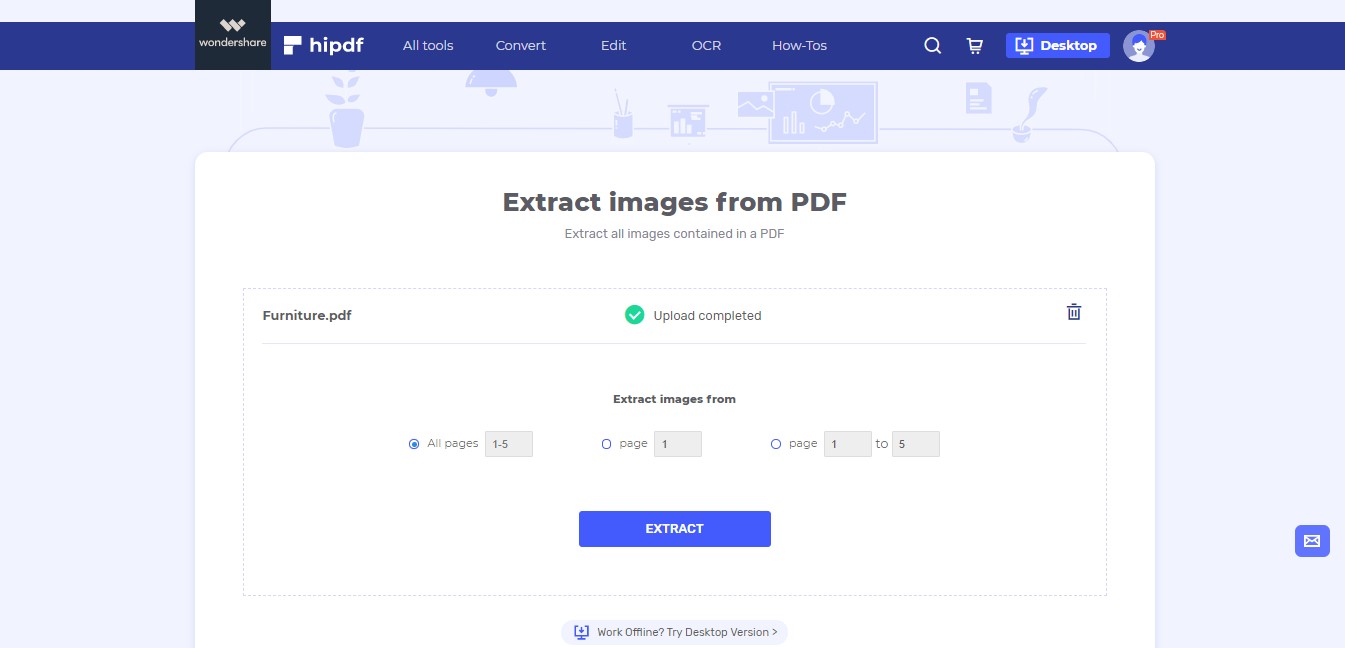

## Running the tool locally

Extracting links from a page can be done with a number of open source command line tools. The tool has been built with a simple and well-known command line tool, Lynx, a text-based web browser popular on Linux-based operating systems. It was first developed around 1992 and is capable of using old-school Internet protocols, including Gopher and WAIS, along with the more commonly known HTTP, HTTPS, FTP, and NNTP. Because Lynx is text-based, you will not be able to view graphics; however, it is a handy tool for reading text-based pages. Lynx can also be used for troubleshooting and testing web pages from the command line.

## API for the Extract Links Tool

Another option for accessing the extract links tool is to use the API. Rather than using the above form, you can make a direct link to the following resource with the `?q` parameter set to the address you wish to extract links from. The API is simple to use and aims to be a quick reference tool. Like all our IP Tools, it has a limit of 100 queries per day, or you can increase the daily quota with a Membership. You can also feed the program several different text fragments or multi-line strings, and it will return all the links in them.
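A direct link to the API amounts to URL-encoding the target address into the `q` query parameter. This is a minimal sketch: the source does not name the endpoint at this point, so `API_ENDPOINT` is a hypothetical placeholder, and `api_link` is an illustrative helper name.

```python
from urllib.parse import urlencode

# Hypothetical placeholder; substitute the real API resource.
API_ENDPOINT = "https://api.example.com/extract-links/"


def api_link(target):
    """Build a direct link that passes the page to scan in the ?q parameter."""
    # urlencode percent-encodes ':' and '/' so the target survives as one value
    return API_ENDPOINT + "?" + urlencode({"q": target})
```

The resulting link can be opened in a browser or fetched with `urllib.request.urlopen`; each request counts toward the daily query limit.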

# Extract all links from a web page for free

Use this tool to extract fully qualified URL addresses from web pages and data files. Just paste or enter a valid URL in the free online link extractor tool: it extracts all the web page URLs from the page's source code, giving you a fast and easy way to scrape links from a web page. You can also search a list of web pages for URLs; the output is one or more columns of URL addresses.

Listing the links, domains, and resources that a page links to tells you a lot about the page, so the reasons for using a tool such as this are wide-ranging: from Internet research and web page development to security assessments and web page testing.

From a forum reply by burque505 (03-22-2021) about a page links scraping tool: right-click on the 'Next' button and set it as the pager. If it doesn't show up in the 'Advanced' settings, you'll have to add a CSS selector by using DevTools (Chrome, Edge) or Inspector (Firefox).
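Pulling fully qualified URLs out of pasted text or data files can be approximated with a regular expression. This is a simplified sketch, not the online tool's implementation: it only recognizes `http` and `https` URLs, trims common trailing punctuation, drops duplicates, and returns each link with its domain as a second column. `extract_urls` and `link_table` are hypothetical names.

```python
import re
from urllib.parse import urlsplit

# Deliberately simple: scheme, "://", then anything up to whitespace,
# an angle bracket, or a quote character.
URL_PATTERN = re.compile(r"https?://[^\s<>\"']+")


def extract_urls(text):
    """Return fully qualified URLs found in text, in order, without duplicates."""
    seen = set()
    urls = []
    for match in URL_PATTERN.finditer(text):
        # Drop punctuation that usually ends a sentence rather than a URL
        url = match.group(0).rstrip(".,;:!?)")
        if url not in seen:
            seen.add(url)
            urls.append(url)
    return urls


def link_table(urls):
    """Two columns per link: the URL and its domain (network location)."""
    return [(url, urlsplit(url).netloc) for url in urls]
```

The trailing-punctuation trim is a trade-off: it also removes a legitimate closing parenthesis at the end of a URL, so for HTML input an HTML parser that reads `href` attributes is more reliable.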
